On Wednesday, former OpenAI Chief Scientist Ilya Sutskever announced that he is creating a new company called Safe Superintelligence, Inc. (SSI) with the goal of safely building “superintelligence,” which is a hypothetical form of artificial intelligence that surpasses human intelligence, perhaps in the extreme.

“We will pursue superintelligence in one straight shot, with one focus, one goal, and one product,” Sutskever wrote on X. “We’ll do it through revolutionary breakthroughs produced by a small crack team.”

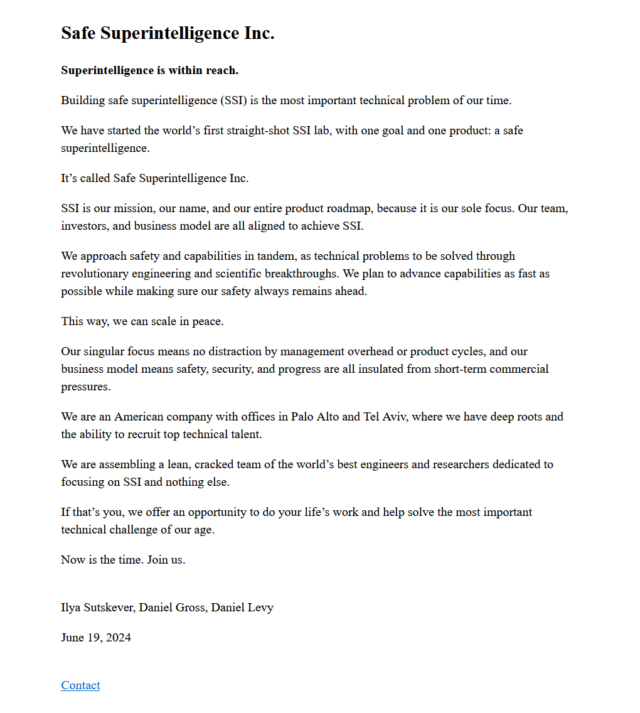

Sutskever was a founding member of OpenAI and previously served as the company’s chief scientist. Two others are joining Sutskever at SSI initially: Daniel Levy, who previously led the Optimization Team at OpenAI, and Daniel Gross, an AI investor who worked on machine learning projects at Apple between 2013 and 2017. The trio posted a statement on the company’s new website.

Sutskever and several of his associates resigned from OpenAI in May, six months after Sutskever played a key role in ousting OpenAI CEO Sam Altman, who later returned. Sutskever did not publicly complain about OpenAI after his departure, and OpenAI executives such as Altman wished him well in his new endeavors. But another departing member of OpenAI’s Superalignment team, Jan Leike, publicly complained that “over the past years, safety culture and processes [had] taken a backseat to shiny products” at OpenAI. Leike joined OpenAI competitor Anthropic later in May.

A nebulous concept

OpenAI is currently looking to create AGI, or artificial general intelligence, which would hypothetically match human intelligence in performing a wide variety of tasks without specific training. Sutskever hopes to leap past that goal in a single, direct effort, with no distractions along the way.

“This company is special in that its first product will be the safe superintelligence, and it will not do anything else up until then,” Sutskever said in an interview with Bloomberg. “It will be fully insulated from the outside pressures of having to deal with a large and complicated product and having to be stuck in a competitive rat race.”

During his previous work at OpenAI, Sutskever was part of the Superalignment team studying how to “align” (shape the behavior of) this hypothetical form of AI, sometimes called “ASI” for “artificial super intelligence,” to be beneficial to humanity.

As you can imagine, it’s hard to align something that doesn’t exist, so Sutskever’s quest has met with skepticism at times. On X, University of Washington computer science professor (and frequent OpenAI critic) Pedro Domingos wrote, “Ilya Sutskever’s new company is guaranteed to succeed, because the superintelligence that is never achieved is guaranteed to be safe.”

Like AGI, superintelligence is a nebulous term. The mechanics of human intelligence are still poorly understood, and human intelligence is difficult to quantify or define because there is no single type of human intelligence. As a result, identifying superintelligence when it arrives may be tricky.

Computers already outperform humans in many forms of information processing (such as basic math), but are they superintelligent? Many proponents of superintelligence imagine a sci-fi scenario of an “alien intelligence” with some form of sentience that operates independently of humans, and that’s pretty much what Sutskever hopes to achieve and safely control.

“You’re talking about a giant super data center that’s autonomously developing technology,” he told Bloomberg. “That’s crazy, right? It’s the safety of that that we want to contribute to.”